Automatically securing white-label sites with TLS using cert-manager

Leo Sjöberg • July 15, 2022

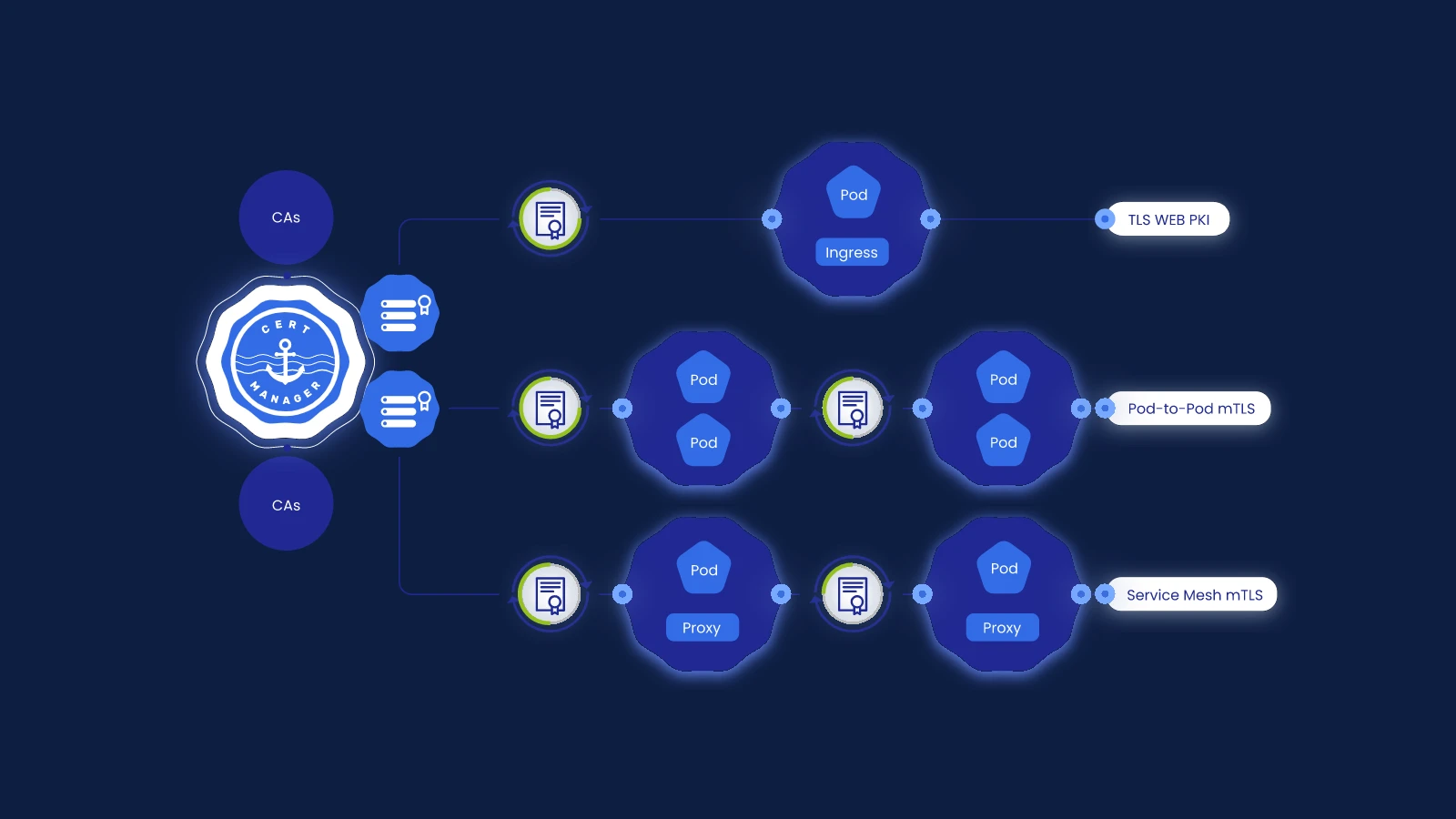

Ingress object and annotations.

Back in 2019, we launched a new offering for our customers - a “job list”. Quite literally a list of jobs for your company. It’s effectively a careers site (think careers.example.com) but stripped down to its core of just showing a list of open positions. We offer this for free to all our customers, and we give everyone their own subdomain, as well as the possibility to use a completely custom domain.

Managing TLS certificates for subdomains is fairly straightforward - all you need is a wildcard certificate and you’re good to go. However, managing certificates, and monitoring all those certificates, for completely custom domains that you don’t control is a more difficult challenge. In this post, we'll look at how we used cert-manager and a small application tied into Kubernetes to achieve full automation of TLS certificates for custom domains, and dig into monitoring in a future post.

When managing TLS certificates for custom domains, there are a few things to do:

1. Keep track of which domains you’re managing (and changes to that list)

2. Issue certificates for each domain

3. Keep track of when each certificate expires so you can retrieve a new one

4. Handle renewal failures (e.g. because the customer has left and their DNS no longer points to you)

5. (Optionally) Handle site failures. This one relies on you monitoring all customer sites (which you should), and is more about detecting when a customer has changed their DNS, or if your product isn’t working for them.

In this post, we’re gonna explore how cert-manager and Kubernetes can help you handle items 2 and 3, and it’ll at least tell you about renewal failures (4), meaning you only have to deal with keeping track of which domains you’re managing, and of course monitoring the customer sites to make sure they’re all working.

For the uninitiated, cert-manager is a Kubernetes controller (1) that simplifies automation and management of TLS certificates via an ACME provider, like Let’s Encrypt. What all these words mean is that you can throw the `cert-manager.io/issuer: letsencrypt-prod` annotation (or whatever your Issuer is named) onto an Ingress resource, and the controller will automatically issue a certificate for whatever domain you’ve specified in the Ingress resource. You can also create a Certificate resource to issue a certificate for whatever domain you want. For the basic ingress use-case, that would look something like this:

Kubernetes "controllers" (1)

A Kubernetes controller is an application that interacts with your cluster to manage objects, in order to reconcile the current state to the desired state. This is often done with Kubernetes' "Custom Resource Definitions", or CRDs, which allow software like cert-manager to expose custom Kubernetes objects like Certificate alongside the built-in objects like Pods and Deployments.

```yaml
kind: Ingress
apiVersion: networking.k8s.io/v1
metadata:
  name: my-app
  annotations:
    cert-manager.io/issuer: letsencrypt-prod
spec:
  rules:
    - host: myapp.example.com
      http:
        paths:
          - path: /
            pathType: Prefix # required in networking.k8s.io/v1
            backend:
              service:
                name: my-app
                port:
                  number: 80
  tls:
    - hosts:
        - myapp.example.com
      secretName: myapp.example.com-tls
```

This is a regular ingress, forwarding all inbound traffic for myapp.example.com to the service my-app. In addition to what you'd expect of an ingress resource, you’ll notice there’s been an annotation added, `cert-manager.io/issuer: letsencrypt-prod`. This tells the cert-manager controller that it should read the ingress to find all hosts declared in the tls section, and create a Certificate resource for each entry there. Then, cert-manager will go ahead and retrieve certificates from Let’s Encrypt with the http01 verification method, and store them in the secret you associated with the host, in our case myapp.example.com-tls.

Now, I said cert-manager would handle issuing certificates and keeping track of expiry, and it does! You don’t need to do anything more to have automatically managed TLS certificates. However, this doesn’t really solve our issue of custom domains. For that, we need to store the list of domains somewhere, and somehow create ingress objects and certificates for those domains. This will ensure traffic gets routed correctly, and handled via TLS.

At Jobilla, we put a list of all domains into Redis (2), and update that every minute. Our small Kubernetes application then reads that list and creates the Ingress object using the official Kubernetes library for Go. So let’s dig into the code:

Disclaimer about Redis (2)

Nowadays, I’d probably recommend sending events over Kafka or similar and letting the application read that event stream.

```go
package main

import (
	"context"
	"fmt"
	"os"
	"strings"

	"example.com/dataprovider"
	networkingv1 "k8s.io/api/networking/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/rest"
)

// namespace is where the generated ingress lives.
const namespace = "default"

func main() {
	// The service that should receive all custom-domain traffic.
	targetSvc := os.Args[1]

	// Fetch the comma-separated list of domains from the datastore.
	dataSource, err := dataprovider.New("redis://default:default@localhost:6379")
	if err != nil {
		panic(err)
	}
	domainList, err := dataSource.Get("domains")
	if err != nil {
		panic(err)
	}
	domains := strings.Split(domainList, ",")

	// connect to k8s
	config, err := rest.InClusterConfig()
	if err != nil {
		panic(err)
	}

	k8s, err := kubernetes.NewForConfig(config)
	if err != nil {
		panic(err)
	}

	newIngress := generateIngressResource("custom-domains", targetSvc, domains)
	ingresses := k8s.NetworkingV1().Ingresses(namespace)
	ctx := context.Background()

	// update or create
	_, err = ingresses.Get(ctx, "custom-domains", metav1.GetOptions{})
	if err != nil {
		_, err = ingresses.Create(ctx, newIngress, metav1.CreateOptions{})
	} else {
		_, err = ingresses.Update(ctx, newIngress, metav1.UpdateOptions{})
	}
	if err != nil {
		panic(err)
	}
}

func generateIngressResource(name, service string, domains []string) *networkingv1.Ingress {
	// One rule per domain so the ingress controller routes each host.
	rules := make([]networkingv1.IngressRule, 0, len(domains))
	for _, domain := range domains {
		rules = append(rules, networkingv1.IngressRule{Host: domain})
	}

	// One TLS entry per domain, so each domain gets its own certificate and secret.
	tls := make([]networkingv1.IngressTLS, 0, len(domains))
	for _, domain := range domains {
		tls = append(tls, networkingv1.IngressTLS{
			Hosts:      []string{domain},
			SecretName: fmt.Sprintf("generated-%s-tls", domain),
		})
	}

	return &networkingv1.Ingress{
		ObjectMeta: metav1.ObjectMeta{
			Annotations: map[string]string{
				"cert-manager.io/issuer": "letsencrypt-prod",
			},
			Name:   name,
			Labels: map[string]string{"customDomain": "true"},
		},
		Spec: networkingv1.IngressSpec{
			// Every host falls through to the same backend service.
			DefaultBackend: &networkingv1.IngressBackend{
				Service: &networkingv1.IngressServiceBackend{
					Name: service,
					Port: networkingv1.ServiceBackendPort{Number: 80},
				},
			},
			Rules: rules,
			TLS:   tls,
		},
	}
}
```

dataprovider

In the sample code, I've used example.com/dataprovider. This is a fictional, generic library, which you would replace with whatever datastore you are using. If you're using MySQL, change to a MySQL driver. If you're relying on Kafka, adapt for Kafka. Ideally, you rely on an interface such that you could plug in different providers, but building that is outside the scope of this post.

In the above code, there are a few important things happening. Firstly, we connect to our datastore and retrieve the domains from there.

From this, we end up with a string slice looking something like ["example.com", "customer.app"].
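One thing to watch with a plain split is that an empty string, stray whitespace, or a repeated entry would each end up as a rule in the ingress. As a minimal sketch (the `parseDomains` helper is hypothetical and not part of the original application), the list could be cleaned up before it is used:

```go
package main

import (
	"fmt"
	"strings"
)

// parseDomains turns a comma-separated string into a clean list of domains.
// It trims whitespace, lowercases each entry, and drops blanks and duplicates.
func parseDomains(raw string) []string {
	seen := make(map[string]bool)
	domains := make([]string, 0)
	for _, part := range strings.Split(raw, ",") {
		domain := strings.ToLower(strings.TrimSpace(part))
		if domain == "" || seen[domain] {
			continue
		}
		seen[domain] = true
		domains = append(domains, domain)
	}
	return domains
}

func main() {
	fmt.Println(parseDomains("example.com, Customer.app,,example.com"))
	// prints [example.com customer.app]
}
```

Lowercasing is a judgment call here; it keeps the generated secret names valid, since Kubernetes object names must be lowercase.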

Next up, we use this list of domains to generate the ingress resource, and this is where most of the magic happens:

Here, before anything else, it's important to understand why we generate a single ingress resource for all domains, rather than one ingress per domain. This is primarily a resource concern: storing more objects is more taxing on the cluster, and means both the ingress controller and cert-manager have to use more resources to do their work. We initially tried with one ingress resource per domain, but found that it caused problems and had no advantages. It also makes any output from kubectl get ingress extremely messy. Therefore, you're much better off with a single ingress for all domains.

All we do is loop over the provided domains and create a TLS and rule entry for each, and then add the required cert-manager.io/issuer annotation to get the certificates issued. For the backend that the ingress targets, we specify whatever service should be handling the request. Remember that your backend then needs to handle the domain distinction on its own (e.g. by reading the Host request header)!
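To illustrate the Host header point, here is a rough sketch of what the backend side could look like using Go's standard net/http package. The `customersByDomain` table, `customerForHost`, and `jobListHandler` are all hypothetical names for illustration; in practice the mapping would come from the same datastore that holds the domain list.

```go
package main

import (
	"fmt"
	"net"
	"net/http"
	"net/http/httptest"
	"strings"
)

// customersByDomain is a hypothetical lookup table from domain to customer.
var customersByDomain = map[string]string{
	"example.com":  "customer-a",
	"customer.app": "customer-b",
}

// customerForHost resolves a Host header value to a customer,
// ignoring case and any port suffix.
func customerForHost(host string) (string, bool) {
	if h, _, err := net.SplitHostPort(host); err == nil {
		host = h
	}
	customer, ok := customersByDomain[strings.ToLower(host)]
	return customer, ok
}

// jobListHandler picks the customer based on the domain the request came in on.
func jobListHandler(w http.ResponseWriter, r *http.Request) {
	customer, ok := customerForHost(r.Host)
	if !ok {
		http.NotFound(w, r)
		return
	}
	fmt.Fprintf(w, "job list for %s\n", customer)
}

func main() {
	// Exercise the handler in-process instead of starting a real server.
	req := httptest.NewRequest(http.MethodGet, "http://customer.app/", nil)
	rec := httptest.NewRecorder()
	jobListHandler(rec, req)
	fmt.Print(rec.Body.String()) // prints "job list for customer-b"
}
```

Returning a 404 for unknown hosts is one reasonable default; it also covers the window where a domain has been removed from the datastore but still resolves to the cluster.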

Lastly, we upsert the ingress resource. We determine which action to take by attempting to retrieve the resource. If retrieval fails, we know we need to Create, and otherwise Update. Since writing the original code, however, I've learned that you could use the Patch operation (with server-side apply) to let Kubernetes itself determine whether to create or update, saving you a bit of code and an API call.
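One caveat with the get-then-create-or-update flow is that treating every retrieval error as "does not exist" also catches things like timeouts or permission problems. The sketch below shows a stricter variant of the same decision, using a hypothetical `store` interface and an in-memory implementation rather than the real Kubernetes client, so it can run on its own. With client-go, the equivalent check would typically be `IsNotFound` from `k8s.io/apimachinery/pkg/api/errors`.

```go
package main

import (
	"errors"
	"fmt"
)

// errNotFound stands in for the "not found" error a real API client returns.
var errNotFound = errors.New("not found")

// store is a hypothetical, minimal view of an API that holds named objects.
type store interface {
	Get(name string) (string, error)
	Create(name, spec string) error
	Update(name, spec string) error
}

// upsert creates the object if it does not exist and updates it otherwise.
// Any error other than "not found" is returned rather than treated as a miss.
func upsert(s store, name, spec string) (string, error) {
	_, err := s.Get(name)
	switch {
	case errors.Is(err, errNotFound):
		return "created", s.Create(name, spec)
	case err != nil:
		return "", fmt.Errorf("get %s: %w", name, err)
	default:
		return "updated", s.Update(name, spec)
	}
}

// memoryStore is an in-memory store used only to exercise upsert.
type memoryStore map[string]string

func (m memoryStore) Get(name string) (string, error) {
	spec, ok := m[name]
	if !ok {
		return "", errNotFound
	}
	return spec, nil
}

func (m memoryStore) Create(name, spec string) error { m[name] = spec; return nil }
func (m memoryStore) Update(name, spec string) error { m[name] = spec; return nil }

func main() {
	s := memoryStore{}
	first, _ := upsert(s, "custom-domains", "example.com")
	second, _ := upsert(s, "custom-domains", "example.com,customer.app")
	fmt.Println(first, second) // prints: created updated
}
```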

In this instance, we've chosen to simply name the ingress custom-domains to make life easy. However, you may of course want to make this more specific to fit your use-case.

The last thing to do is deploy this. One thing that's a bit special about this code is that it doesn't have any event loop. As such, it's not a long-running programme. Instead, it's built to be run on whatever frequency you want to synchronise domains, deployed as a CronJob in Kubernetes. Here it is also worth noting that if you rely on an event stream, like through Kafka, you would instead likely prefer a long-running process, effectively a Kubernetes controller, that just sits idle while it waits for messages.

Deploying this application is almost like a regular CronJob, with the exception that we need to give our application permissions to interact with Ingress resources through Kubernetes' RBAC controls.

The CronJob itself doesn't look like anything special:

```yaml
apiVersion: batch/v1 # batch/v1beta1 has been removed from recent Kubernetes versions
kind: CronJob
metadata:
  name: custom-domain-synchronizer
spec:
  schedule: "* * * * *"
  jobTemplate:
    spec:
      template:
        spec:
          serviceAccountName: custom-domain-synchronizer
          restartPolicy: Never
          containers:
            - name: app
              image: yourorg/domain-synchronizer:latest
              command: ["/main"]
              args:
                - "my-target-svc"
```

However, we do specify a serviceAccountName here, so let's define that service account, and its permissions:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: custom-domain-synchronizer
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: manage-ingresses-custom-domain-synchronizer
rules:
  - apiGroups: ["networking.k8s.io"]
    resources: ["ingresses"]
    verbs: ["create", "get", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: custom-domain-synchronizer
roleRef:
  kind: Role
  apiGroup: rbac.authorization.k8s.io
  name: manage-ingresses-custom-domain-synchronizer
subjects:
  - kind: ServiceAccount
    name: custom-domain-synchronizer
    namespace: default # the namespace the service account lives in
```

This creates a service account that is able to perform retrieval, creation, and updates on ingress resources.

And at long last, we're at the finish line. That's the whole application, and its deployment. Now, all you need to do is push some domains to your datastore and watch the automation work its magic!

With a Go application that's well under 100 lines long, we've managed to completely automate ingress configuration and TLS certificate management of completely custom domains. When we first built this, we did it because for every new customer, we had to run a manual command to issue the certificate and set up the right ingress rules. This didn't scale well at all. So as developers do, we wrote some code to solve that problem, and now we haven't had to care about those certificates for years!

The only missing piece we had left after this was how we would monitor all our sites... and that's for another time!

Hopefully, you've found this useful and educational, and perhaps it even inspired you to automate a bit more. All code in this post is free to use however you like, so go ahead and experiment!